Video editing used to feel like juggling several jobs at once: writing, planning, organizing, and then actually putting it all together. Now, Claude 3.7 Sonnet steps in like your all-in-one creative teammate. This version of Claude isn't just smart; it has serious storytelling skills that make planning and scripting videos way easier.

With the right tools in place, turning ideas into polished videos is no longer a headache. Keep reading to see how to use Claude to streamline your video projects from start to finish.

Part 1. What You Should Know About Claude 3.7 Sonnet

For those who don't know, Claude is part of a growing line of AI models built by Anthropic, a company started by ex-OpenAI researchers that builds safe and helpful AI tools. The first version of Anthropic's Claude, Claude 1, was released in March 2023.

Since then, Claude has kept getting smarter with each upgrade. In February 2025, Anthropic rolled out Claude 3.7 Sonnet, and it's easily one of the company's smartest updates yet. Here's what sets it apart from earlier versions:

- It's a hybrid reasoning model, so it can answer quickly or take extra time to think step by step when a request needs more depth.

- It handles a large context window (up to 200K tokens), perfect for long video scripts or outlines.

- It's better at tracking big-picture ideas, so your whole project stays on point.

Because of these upgrades, Claude 3.7 Sonnet is great for all kinds of real work, including:

- Code Writing: Claude helps you write clean code fast, fix errors, and even explain tricky parts in plain language.

- Script Generation: From video scripts to voiceovers, Claude AI makes it easy to turn rough ideas into ready-to-use content.

- Business Logic: Whether it's breaking down workflows, drafting reports, or planning strategies, Anthropic's Claude keeps your projects clear and on track.

Part 2. How Claude 3.7 Sonnet Takes on Multiple Creative Roles

With all these features packed in, Claude 3.7 Sonnet acts like a full-on creative partner who sees the big picture and makes smart choices that keep your work moving. It can plan, organize, and guide full video projects from start to finish.

To show you what that looks like in action, take a look at the table below and see how Claude 3.7 Sonnet takes on multiple creative roles:

| Claude's Role | What It Does | How It Helps with Visual Generation |
| --- | --- | --- |
| Project Planner | Organizes workflow, timelines, and asset checklists. | Keeps visual production on track by helping you map when and where visuals should be created or added. |
| Scriptwriter | Writes clear, structured scripts with character cues, pacing, and dialogue. | Provides scene breakdowns and detailed visual prompts for image or video generators to follow. |
| Creative Director | Sets the tone, pacing, and stylistic direction of the project. | Suggests visual styles, mood boards, color palettes, and camera movement references for consistency. |
| Storyboard Assistant | Turns rough outlines into step-by-step visual scene planning. | Converts text descriptions into visual cues for layout, shot framing, and animation support. |
| Content Refiner | Improves drafts, rewrites awkward sections, and polishes visual instructions. | Makes sure prompts for AI visuals are clear, creative, and aligned with the story. |

As shown in the table, Claude 3.7 Sonnet doesn't make images or videos by itself, but it's great at giving clear prompts, visual cues, and smart outlines to guide other tools.

For the actual visual creation, you can team up Claude's planning power with an editor like Filmora. With features like AI Image to Video, Text to Video, and AI Copilot Editing, turning your ideas into real content feels simple and fast.

Part 3. From Script to Screen: Team Up Claude 3.7 Sonnet with Filmora

Now that you know Claude 3.7 Sonnet can help you map everything out, it's time to turn that plan into something you can actually watch. As mentioned above, Wondershare Filmora steps in as the perfect creative partner. While Claude handles the structure, long-form planning, and smart revisions, Filmora jumps in with easy tools and AI-powered features to create your video.

Here's how Filmora makes it all happen:

By combining these two tools, you can create a Claude-planned video that feels well organized and visually polished from start to finish. So let's learn how to use Claude as your behind-the-scenes director while Filmora brings it all to life on screen. Check out the simple step-by-step guide below:

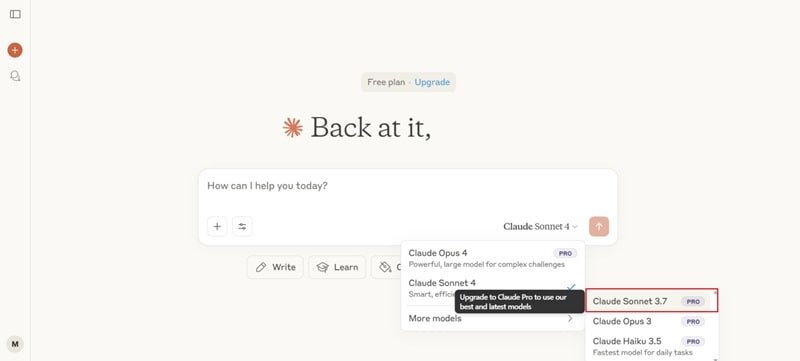

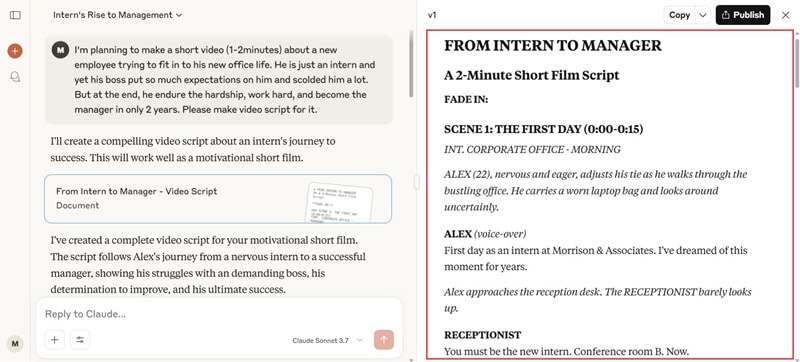

Step 1. Ask Claude for a Script or Video Plan

You can start by heading over to the Claude site. Log in to your account or sign up if you're new. Switch the model to Claude 3.7 Sonnet to get the latest features. Just a heads up: at launch, Anthropic offered Claude 3.7 Sonnet on every plan, including Free, but its extended thinking mode required a paid plan such as Pro, so you'll need to upgrade if you want that deeper reasoning.

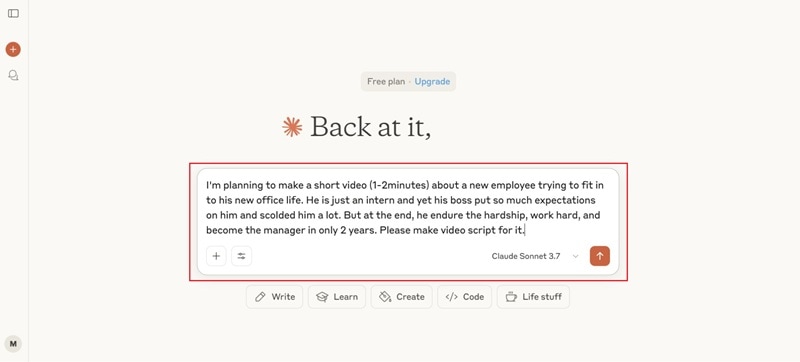

Next, just type what you need in the description box. For example, you can ask Claude 3.7 Sonnet to act as the scriptwriter for your video. Check out the sample prompt below to get an idea. Once it looks good, hit Enter and let Claude do its thing.

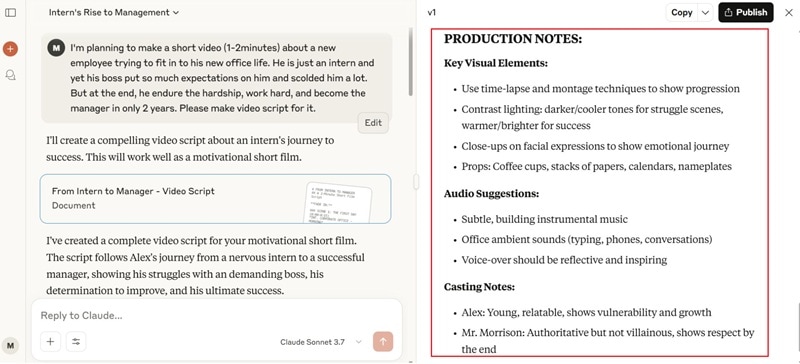

- Claude will generate a full video script.

- You can scroll down for extra production notes like key visuals, audio ideas, and casting suggestions.

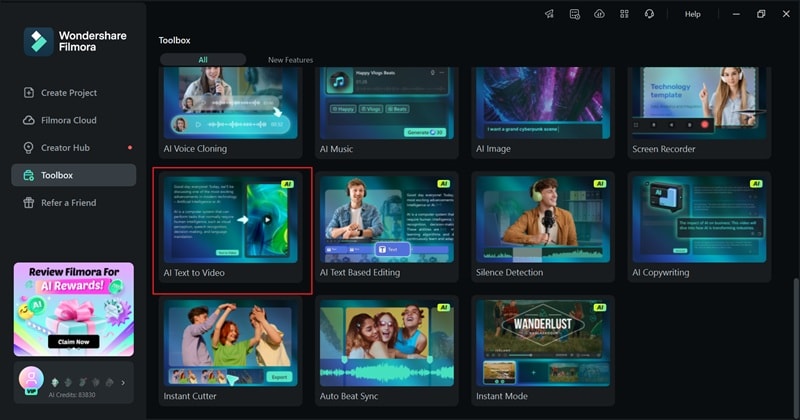

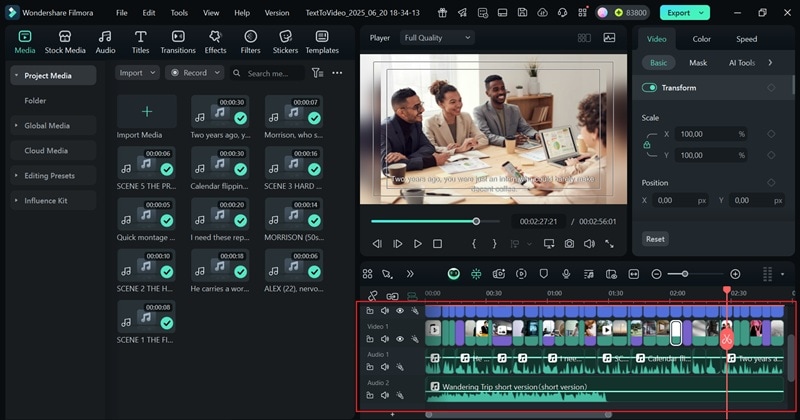
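If you write this kind of request often, a small helper can keep your prompts consistent. The sketch below is only an illustration: the function name, the fields, and the instruction wording are assumptions rather than anything Claude requires, and the role names simply mirror the table in Part 2. It builds plain text that you paste into Claude's message box.

```python
# Role names mirror the table in Part 2; the instruction text is illustrative only.
ROLE_INSTRUCTIONS = {
    "Project Planner": "Lay out the workflow, timeline, and asset checklist.",
    "Scriptwriter": "Write a structured script with narration, pacing, and visual cues.",
    "Creative Director": "Set the tone, pacing, and visual style.",
    "Storyboard Assistant": "Break the outline into shot-by-shot scene planning.",
    "Content Refiner": "Tighten the draft and polish the visual instructions.",
}


def build_video_prompt(role, topic, audience, length_seconds):
    """Return a prompt string to paste into Claude (hypothetical helper)."""
    if role not in ROLE_INSTRUCTIONS:
        raise ValueError(f"Unknown role: {role!r}")
    if length_seconds <= 0:
        raise ValueError("length_seconds must be positive")
    lines = [
        f"Act as the {role} for my video.",
        f"Topic: {topic}",
        f"Audience: {audience}",
        f"Target length: {length_seconds} seconds",
        ROLE_INSTRUCTIONS[role],
        # Assumed convention: bracketed notes are easy to spot and strip later.
        "Put production notes (key visuals, audio ideas) in [square brackets].",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_video_prompt("Scriptwriter", "How to brew pour-over coffee", "beginners", 60))
```

Asking for production notes in square brackets is just one convention. Its benefit is that the spoken lines are easy to separate from the notes when you move the script into an editor.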

Step 2. Open Filmora and Use the Text to Video Feature

Now it's time to bring that script to life using Filmora. Just download and install the latest version of Filmora, open the app, go to "Toolbox" and click "Text to Video".

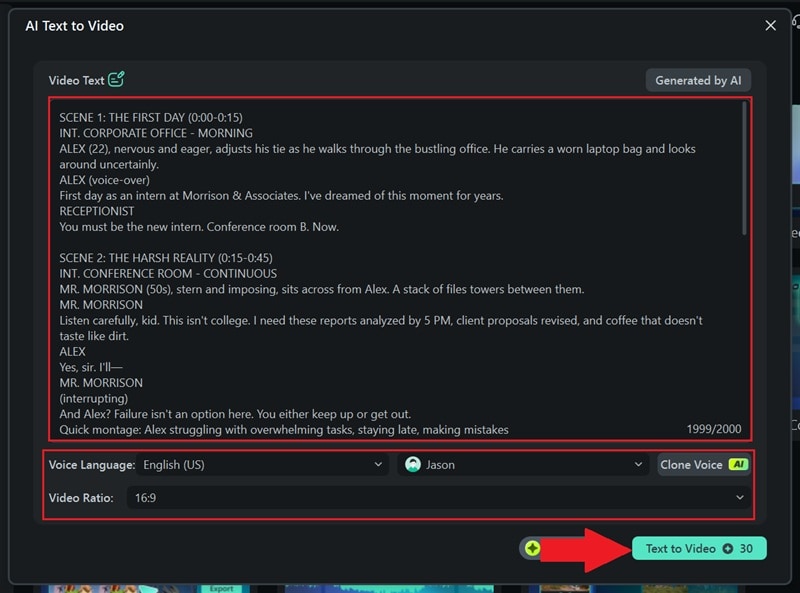

On the next screen, paste the script you got from Claude. You can customize:

- Language

- Video format

- Voice tone

Click "Text to Video" and let Filmora generate your draft video.
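Before pasting, you may want only the spoken lines and a rough idea of how long they will run. Here is a minimal sketch, assuming your script marks production notes in [square brackets] and that narration averages about 150 words per minute. Both are assumptions for illustration, not Filmora or Claude behavior.

```python
import re

WORDS_PER_MINUTE = 150  # assumed average narration pace; adjust for your voice


def extract_narration(script):
    """Drop [bracketed] production notes and blank lines, keeping spoken text."""
    without_notes = re.sub(r"\[[^\]]*\]", "", script)
    # Collapse stray whitespace left behind by removed notes.
    lines = [" ".join(line.split()) for line in without_notes.splitlines()]
    return "\n".join(line for line in lines if line)


def estimate_seconds(narration, wpm=WORDS_PER_MINUTE):
    """Rough runtime estimate from word count."""
    words = len(narration.split())
    return round(words / wpm * 60)


if __name__ == "__main__":
    script = (
        "[VISUAL: kettle steaming]\n"
        "Start with fresh water.  [SFX: pour]\n"
        "\n"
        "Grind the beans just before brewing."
    )
    narration = extract_narration(script)
    print(narration)
    print(estimate_seconds(narration), "seconds (approx.)")
```

The estimate is only a planning aid. The real length depends on the voice and pacing you pick in Filmora.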

Step 3. Style the Video Based on Claude's Suggestions

Once the video is generated, it appears on the timeline. Hit play to preview it.

- Filmora automatically adds music, a voiceover, and clips to match the script Claude wrote.

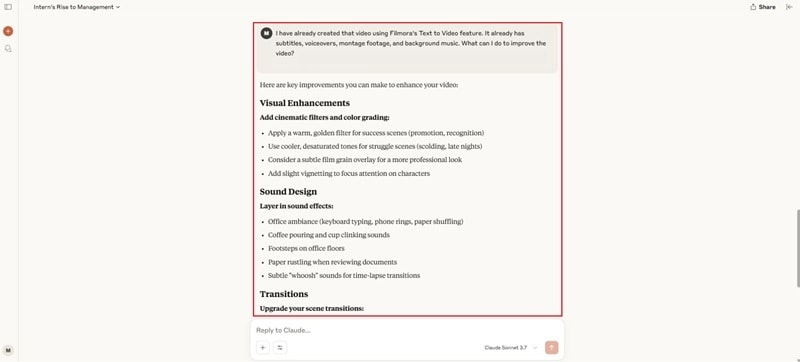

- You can go back to Claude for more scene-specific advice.

To add filters, go to the "Filters" tab, drag your favorites to the timeline, and tweak the look in the settings panel.

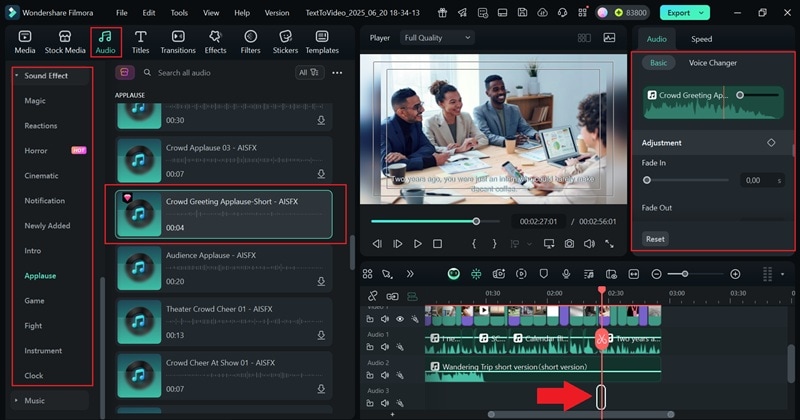

Next, enhance your video with sound effects:

- Go to the "Audio" tab → "Sound Effects" → "Applause".

- Drag and drop a clip onto the timeline, then adjust length and volume.

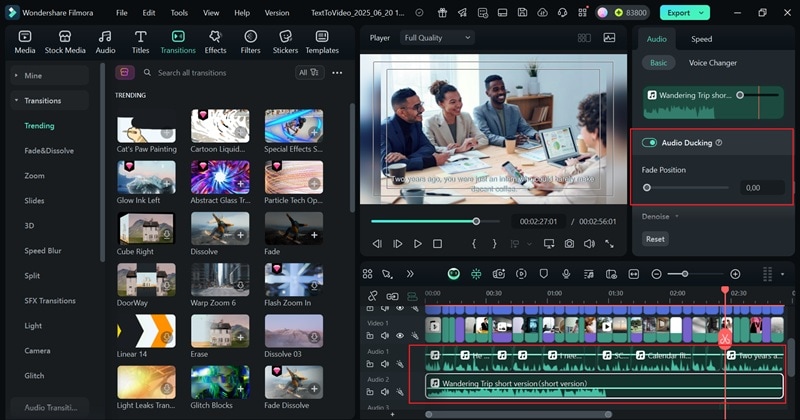

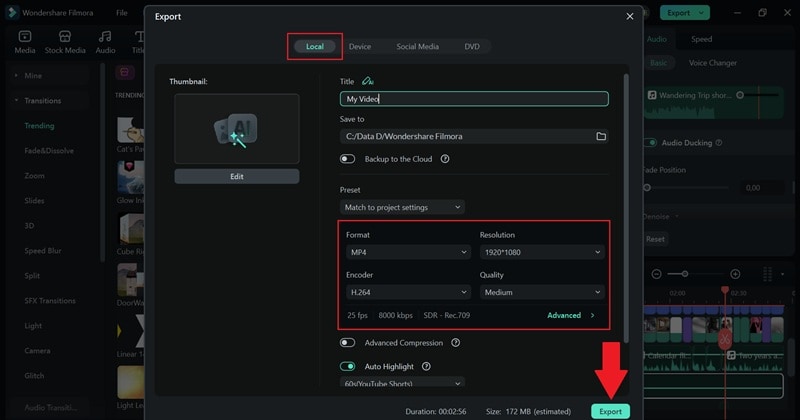

Step 4. Polish and Export

Before exporting, polish your audio using Filmora's Audio Ducking feature. This ensures voice and background music don't clash.
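To see what ducking does conceptually, here is a simplified sketch. It is not Filmora's implementation: the threshold, the gain value, and the frame-by-frame level lists are assumptions chosen to keep the idea visible. Real ducking also fades the music down and back up smoothly instead of switching instantly.

```python
def duck_music(voice_levels, music_levels, threshold=0.1, duck_gain=0.25):
    """Lower the music wherever the voice is louder than the threshold.

    Both inputs are per-frame loudness values between 0.0 and 1.0
    (a hypothetical representation used only for this illustration).
    """
    if len(voice_levels) != len(music_levels):
        raise ValueError("tracks must have the same number of frames")
    return [
        music * duck_gain if voice > threshold else music
        for voice, music in zip(voice_levels, music_levels)
    ]


if __name__ == "__main__":
    voice = [0.0, 0.5, 0.6, 0.0]   # narrator speaks in the middle two frames
    music = [0.8, 0.8, 0.8, 0.8]   # constant background track
    print(duck_music(voice, music))
```

In the example, the music stays at full level while the narrator is silent and drops to a quarter of its level while the narrator speaks.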

Finally, hit "Export" to save or share your video:

- Select "Local" or "Social Media"

- Choose format and quality

- Name the file and save it

Extra AI Features in Filmora You Can Pair with Claude 3.7 Sonnet

As you've seen, pairing Claude AI with Filmora makes it easy to create videos that are clear, organized, and creatively planned. Not to mention, Filmora's Text to Video feature is just one of the many tools you can use. Here are some other Filmora features that you can combine with Claude 3.7 Sonnet:

1. AI Idea to Video: Turn Prompts into Structured Scenes

Use Claude to write a detailed description of your video idea — including scene transitions, tone, or pacing. Then:

- Paste that into Filmora's AI Idea to Video feature.

- Filmora will automatically generate a multi-scene short video with smooth flow and logical structure.

- You can fine-tune scenes in the timeline if needed.

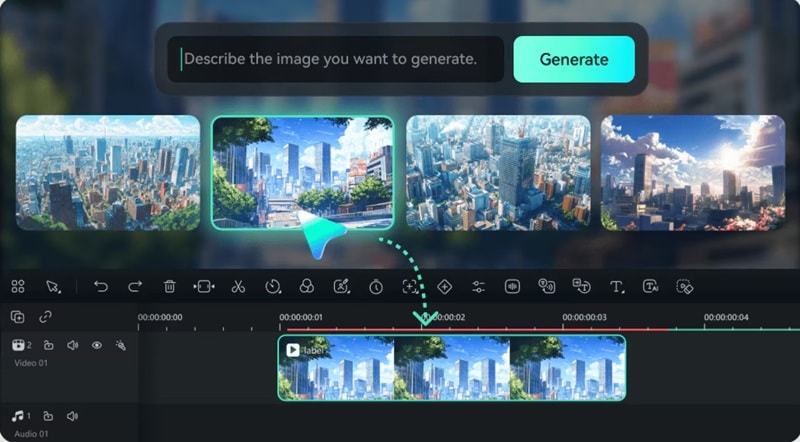
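If it helps to keep the description organized before you paste it, you can structure it scene by scene. The sketch below is one hypothetical layout. The `Scene` fields and the output wording are assumptions, not a format that Filmora's AI Idea to Video requires.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    description: str  # what happens on screen
    transition: str   # how this scene hands off to the next
    seconds: int      # rough duration


def render_brief(title, tone, scenes):
    """Turn a list of scenes into a plain-text brief you can paste."""
    if not scenes:
        raise ValueError("at least one scene is required")
    total = sum(scene.seconds for scene in scenes)
    lines = [
        f"Video idea: {title}",
        f"Tone: {tone}",
        f"Total length: about {total} seconds",
    ]
    for number, scene in enumerate(scenes, start=1):
        lines.append(
            f"Scene {number} ({scene.seconds}s): {scene.description} "
            f"Transition: {scene.transition}."
        )
    return "\n".join(lines)


if __name__ == "__main__":
    scenes = [
        Scene("A kettle heats on the stove.", "slow fade", 5),
        Scene("Water pours over fresh grounds.", "cut", 10),
    ]
    print(render_brief("Pour-over coffee in a minute", "calm and warm", scenes))
```

You could also ask Claude to fill in a layout like this directly, then paste the result into Filmora.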

2. AI Image Generator: Visualize Claude's Descriptions

Let Claude help you craft visual prompts. For example:

- Ask Claude 3.7 Sonnet to generate a strong image prompt with detailed reasoning.

- Copy that prompt into Filmora's AI Image Generator.

- Filmora will give you four image options. Choose your favorite and save it.

3. Image to Video: Animate Your AI-Generated Visuals

Once you have your AI image ready:

- Go to Filmora's AI Image to Video section.

- Select a creative animation template.

- Upload your image, generate the video, and polish it in the timeline.

- Save your final animated clip — a smooth, stylized visual brought to life from Claude's prompt.

By combining Claude 3.7 Sonnet's advanced reasoning with Filmora's AI creative tools, you get a powerful workflow for turning ideas into polished, dynamic content — with minimal manual effort.

Conclusion

Anthropic recently rolled out its upgraded AI model, Claude 3.7 Sonnet, and it can do a lot more than you might expect. So, we took a closer look at this powerful model to see what it can really do.

Turns out, Claude 3.7 Sonnet is a pro at handling structure, writing scripts, guiding style, and helping plan full video projects from start to finish. When you team it up with an all-in-one editor like Wondershare Filmora, creating a clear and creative video feels easy. Claude gives the direction, and Filmora delivers the magic with smart tools like AI Text to Video and AI Image to Video.

