
What is Gemini

When talking about artificial intelligence (AI), it's impossible to leave out one of the biggest names shaping the space: Gemini. Gemini marks Google's bold move into the new wave of AI, which is rapidly reshaping everything from how we search to how we create.

If you don't want to feel out of the loop, start by getting familiar with what Google Gemini is.

Gemini is Google's latest family of AI models, designed to handle multiple types of information at once. (The chatbot built on these models was previously known as Bard.) Rather than working only with text like a standard large language model (LLM), Gemini is a multimodal system, meaning it can understand and generate content across text, images, audio, video, and even code.

Because "Gemini" refers to more than one thing in Google's AI ecosystem, people searching for what Gemini AI is may find that the name points to different products depending on the context.

- Gemini, the family of multimodal AI models that power Google's apps, products, and developer tools.

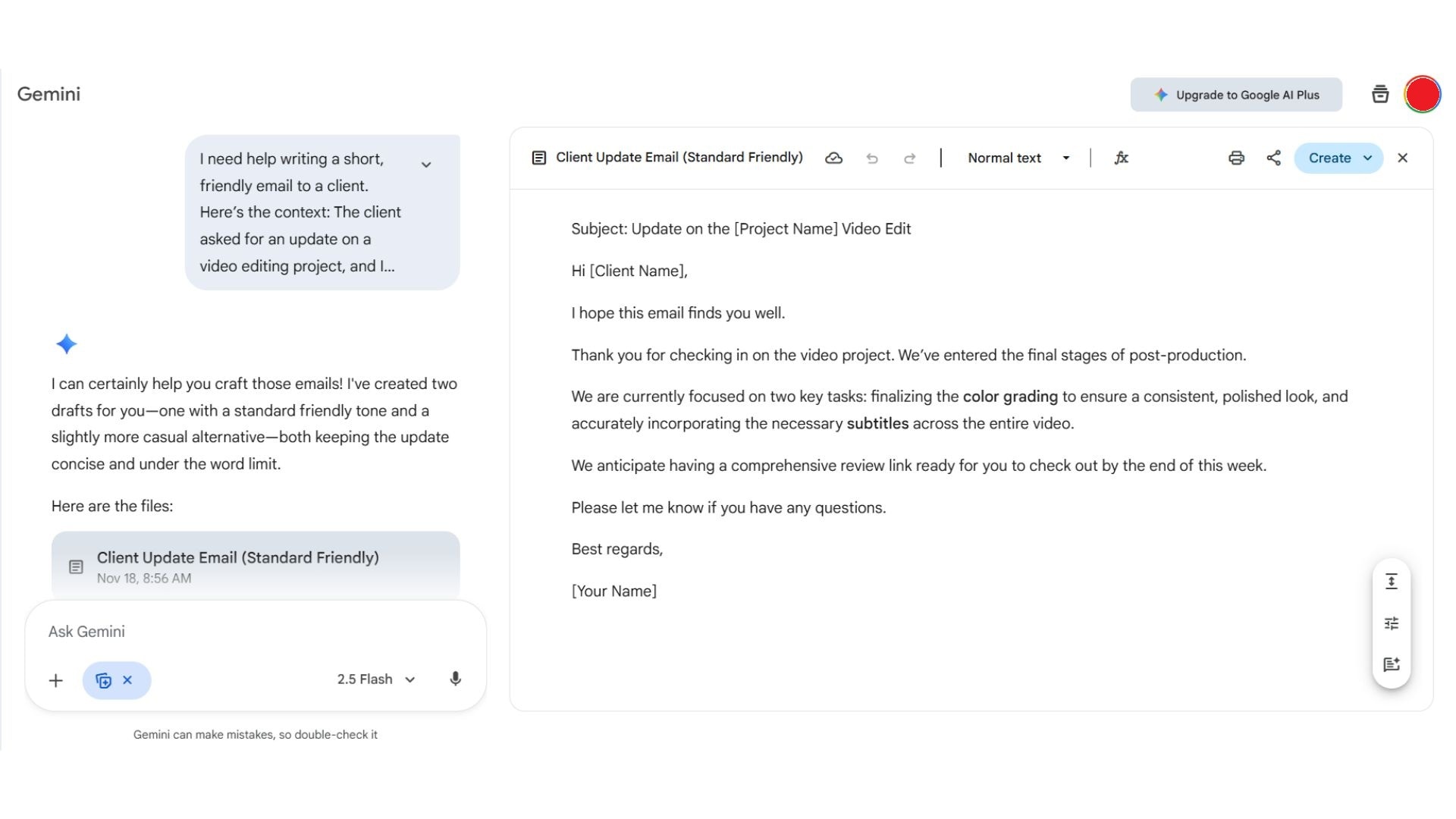

- Google's chatbot interface that runs on these models, which replaced Bard and can also generate images.

- The new AI assistant that is rolling out on Android phones (especially Google Pixel), Wear OS watches, Android Auto, and Google TV.

- Gemini for Google Workspace, which adds AI-powered help to Gmail, Docs, Sheets, Slides, and other paid Workspace tools.

With Google working to weave Gemini into nearly all of its products, everything technically falls under the Gemini umbrella. However, each tool still serves a different role in Google's growing AI ecosystem.

Google Gemini Models:

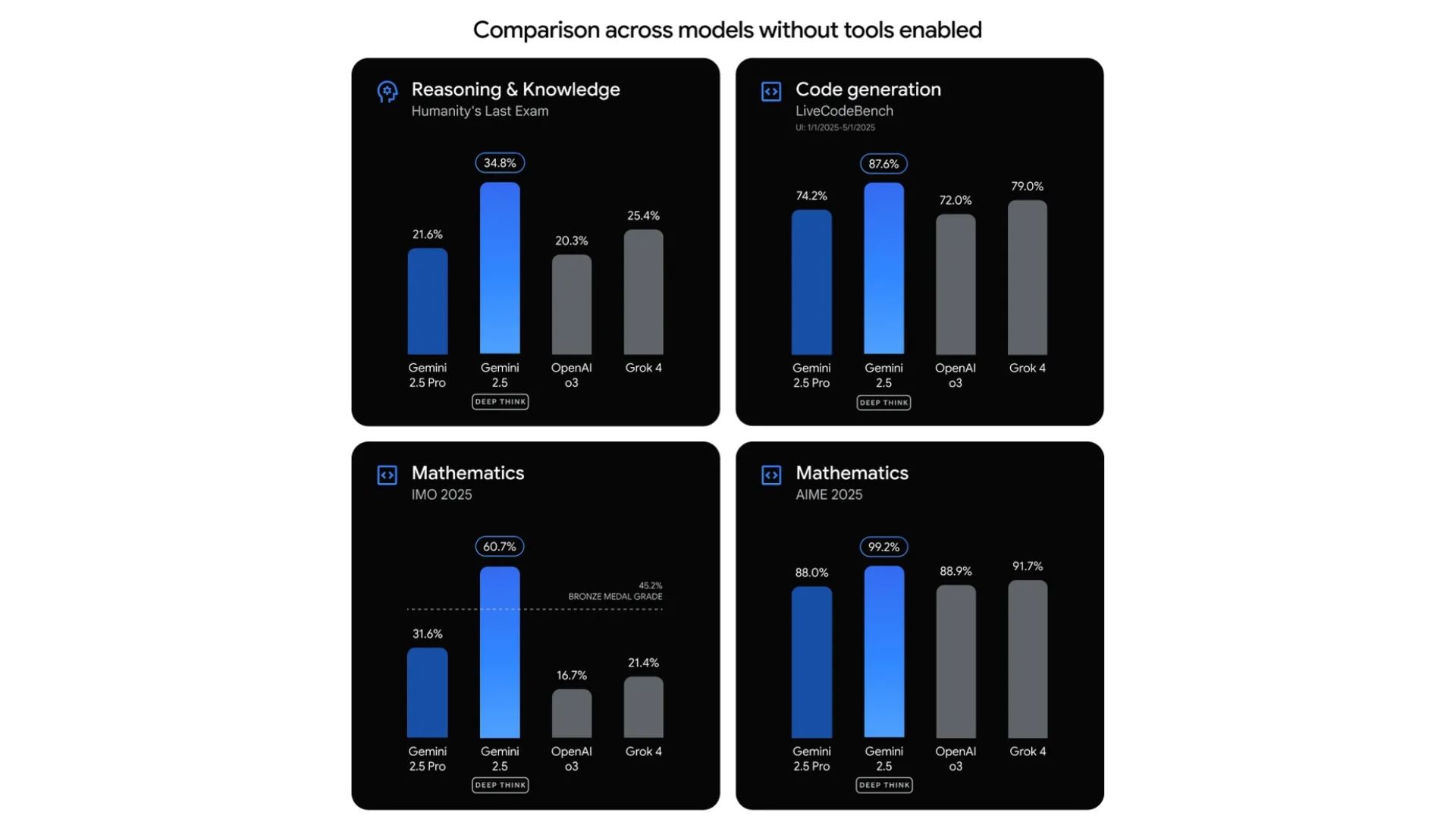

As of now, Gemini is already in the 2.5 generation, with the Gemini 3.0 release rumored to be just around the corner. The core advancement in the Gemini 2.5 series is the introduction of its reasoning capabilities, which Google refers to as "thinking."

The lineup is divided into several model tiers, though this structure keeps changing as Google updates it. The tiers differ largely in model size, which affects how well each one handles complex tasks.

- Gemini 2.5 Pro: This is Google's most capable flagship model, designed for deep reasoning, complex problem-solving, and advanced coding. It prioritizes accuracy and analytical depth over raw speed. It excels at tasks requiring multi-step logic, very long inputs (a context window of up to 1 million tokens), and multimodal analysis (text, image, audio, video).

- Gemini 2.5 Flash: The fast and efficient model in the lineup. It maintains strong performance but is optimized for high-volume, low-latency tasks that prioritize speed and cost-effectiveness.

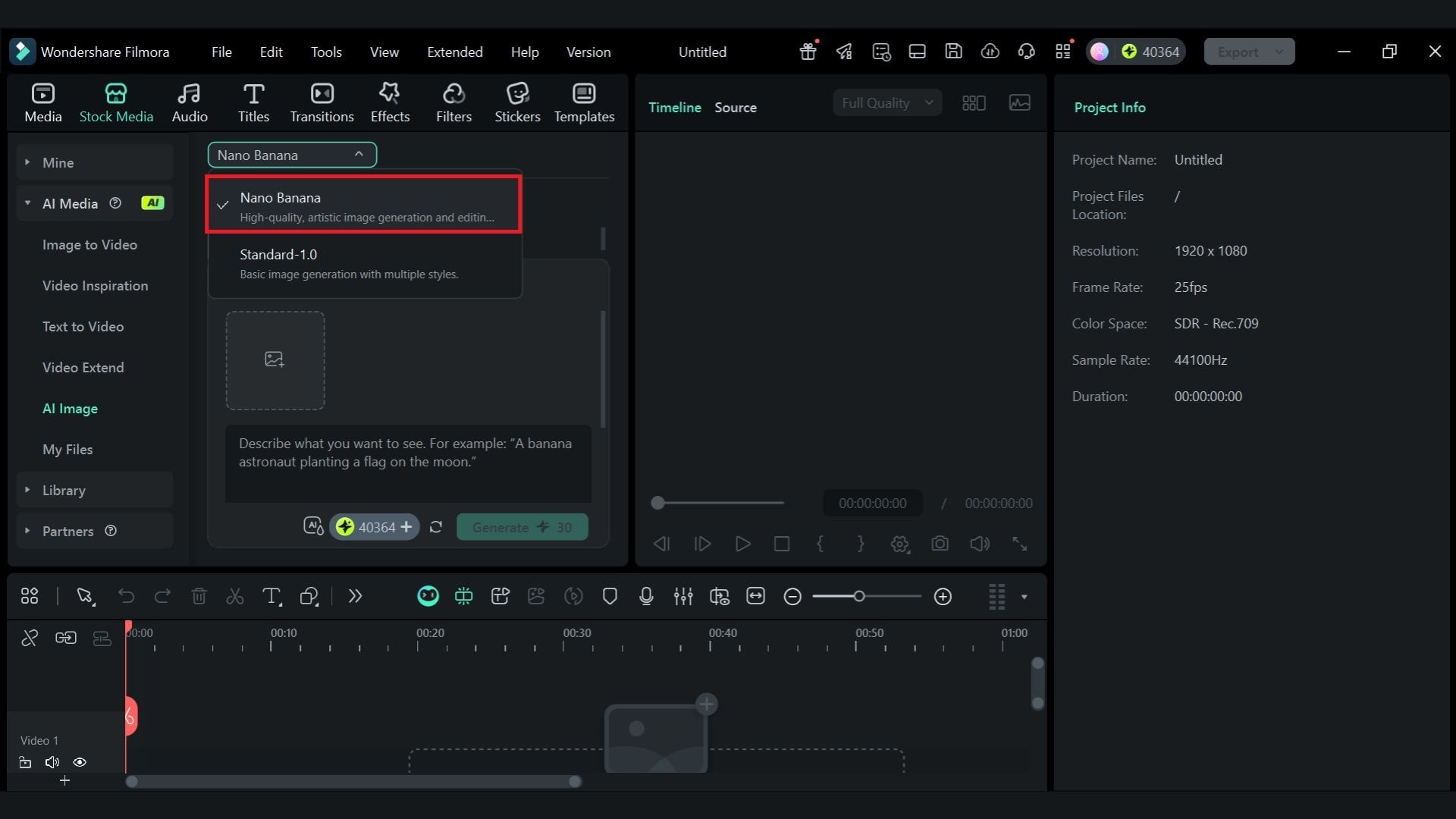

- Gemini 2.5 Flash Image (a.k.a. "Nano Banana"): This is a specialized model for high-quality image generation and editing. It builds on the speed of Flash and adds features like prompt-based image editing, character consistency across generations, and multi-image fusion.

- Gemini 2.5 Flash-Lite: The fastest and most cost-efficient variant in the family, designed for ultra-low-latency, high-concurrency workloads. It offers lightweight reasoning suited to high-volume, simple operational tasks.

| Model | Pro | Flash | Flash Image | Flash-Lite |
|---|---|---|---|---|
| Multimodal Input | Text, Code, Image, Video, Audio, PDF | Text, Code, Image, Video, Audio, PDF | Text, Image, Code, PDF | Text, Code, Image, Video, Audio, PDF |
| Output Type | Text, Code, JSON | Text, Code, JSON | Image, Text | Text, Code, JSON |
| Intended Use | Most advanced reasoning; complex problem-solving; advanced coding; deep analysis. | Fast performance on everyday, high-volume tasks; chat applications; summarization. | Rapid creative workflows; high-quality, prompt-based image generation and editing. | High-volume, cost-efficient tasks; classification; simple routing; low-latency batch operations. |
| Thinking Mode | ✅ | ✅ | ❌ | ❌ |
| Relative Speed | Slower | Fast | Fast | Fastest |
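If you are choosing between these tiers in code, the "Intended Use" row can be read as a simple decision rule. The sketch below is only an illustration of that rule: the `Task` fields and the `pick_tier` helper are hypothetical names, and the model ID strings are assumptions that should be checked against Google's current model list before use.

```python
from dataclasses import dataclass

# Assumed model ID strings; verify them against Google's current model list.
MODEL_IDS = {
    "pro": "gemini-2.5-pro",
    "flash": "gemini-2.5-flash",
    "flash_image": "gemini-2.5-flash-image",
    "flash_lite": "gemini-2.5-flash-lite",
}


@dataclass
class Task:
    """Hypothetical description of what a job needs from the model."""
    needs_image_output: bool = False
    needs_deep_reasoning: bool = False
    high_volume: bool = False


def pick_tier(task: Task) -> str:
    """Map a task to a tier, following the 'Intended Use' row of the table."""
    if task.needs_image_output:
        return "flash_image"   # the only tier in the table that outputs images
    if task.needs_deep_reasoning:
        return "pro"           # slower, but the strongest at complex reasoning
    if task.high_volume:
        return "flash_lite"    # fastest and cheapest for simple bulk work
    return "flash"             # balanced default for everyday tasks


if __name__ == "__main__":
    tier = pick_tier(Task(high_volume=True))
    print(tier, MODEL_IDS[tier])
```

The order of the checks is a design choice: output type comes first because it is a hard requirement, while speed and cost are trade-offs.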

Older Gemini Models

Before reaching this stage, Gemini went through several earlier versions that helped shape the system into what it is today.

- Gemini 1.0 Ultra: This was Google's first flagship Gemini model. Ultra was focused on heavy multimodal reasoning, complex tasks, and advanced problem-solving.

- Gemini 1.0 Nano: Nano was the smallest and most efficient model, specifically designed for on-device performance. It powered features directly on smartphones (like Pixel) and other hardware.

- Gemini 1.5 Pro and 1.5 Flash: The next generation of models, built for breakthrough performance. Pro was designed as a strong, all-purpose model with a massive context window, while Gemini 1.5 Flash was a lighter, faster version.

Key Features/Core Capabilities of Gemini

If you're wondering what the Gemini app is used for, the answer is: a lot. The following are the most common and useful features of Gemini AI and how it can help you:

Technical Specifications:

To handle complex multimodal tasks, Gemini is trained on large-scale multilingual and multimodal datasets. It's supported by years of development from Google DeepMind and Google Research, with specifications as follows:

- Model Type: Transformer-based LLM

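- Training Data: Not publicly disclosed for Gemini. The widely repeated figure of 750 GB of data (1.56 trillion words) appears to trace back to LaMDA, the model behind the original Bard, rather than to Gemini itself.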

- Availability: Access to Gemini is provided through the Gemini App, Google Workspace, the Gemini API (Google AI Studio), and Vertex AI (Google Cloud).

- Context Window: Up to 1 million tokens (a token is a chunk of text, like a word or part of a word).
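To get a feel for what a 1-million-token context window means in practice, here is a minimal sketch that estimates whether a piece of text would fit. It assumes a rough rule of thumb of about four characters per token for English text. The real count depends on Gemini's tokenizer, so treat the result as a ballpark figure. The helper names and the output reserve are hypothetical.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000  # limit quoted in the specifications above
CHARS_PER_TOKEN = 4                # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Rough token estimate using ceiling division on the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 8_192) -> bool:
    """Check whether the text, plus room for a reply, fits in the window.

    reserved_for_output is a hypothetical budget for the model's answer.
    """
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    sample = "Summarize the following report. " * 1000
    print(estimate_tokens(sample), fits_in_context(sample))
```

For exact numbers, use the token-counting facility of whichever Gemini interface you are working with rather than this estimate.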

When and Where to Use Gemini

Because Gemini is a multimodal AI model that handles several kinds of media, its use cases span many industries.

How Gemini Is Commonly Used

- Marketing & Advertising: Gemini can support marketing teams in many ways, from generating blog ideas and writing copy to producing custom visuals.

A good example is the "impossible ad" made for Slice, a healthy soda brand, where BarkleyOKRP used Gemini 2.5 Pro and Google's generative media tools to build a full AI-driven retro radio station. The workflow looked like this:

- Gemini wrote the 80s/90s-style lyrics, character stories, and DJ lines.

- Imagen and Veo handled the visuals.

- Lyria created lo-fi background music.

- Chirp generated the radio voices.

- Education & Training: Educators, students, and staff use Gemini to speed up lesson planning, brainstorm new ideas, and learn with more confidence. It can help create lesson plans, adapt materials for different learning levels, and generate assessments or practice activities in minutes.

Across the U.S., over 1,000 higher-education institutions have already integrated Gemini for Education into their academic and administrative systems.

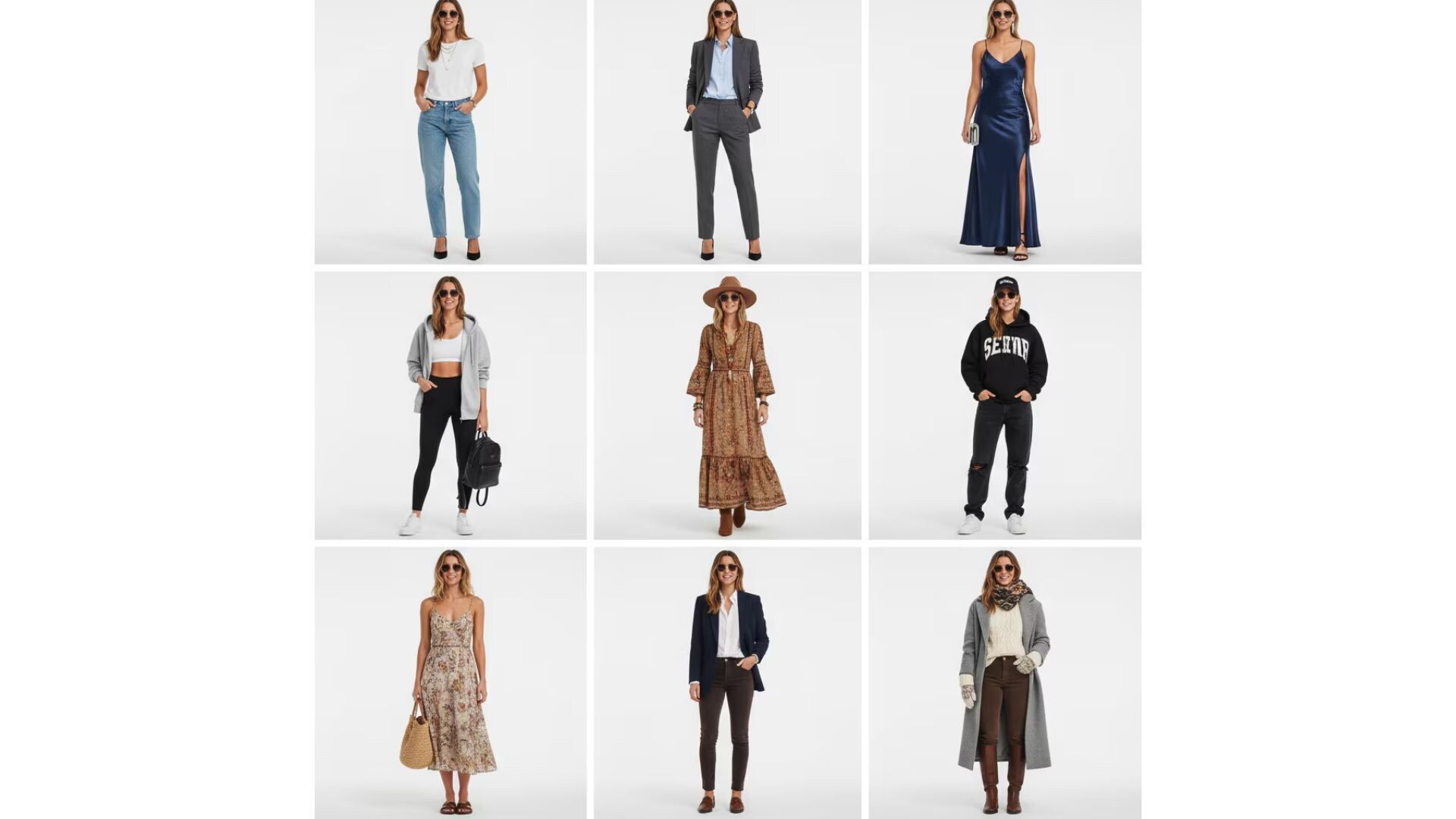

- Social Media Content: Creators are already using Gemini to start viral trends, and its multimodal foundation is a big part of why.

Many use Gemini to speed up brainstorming, rapidly prototyping dozens of visual ideas, scripts, and campaigns until they land on a concept with a real chance of going viral.

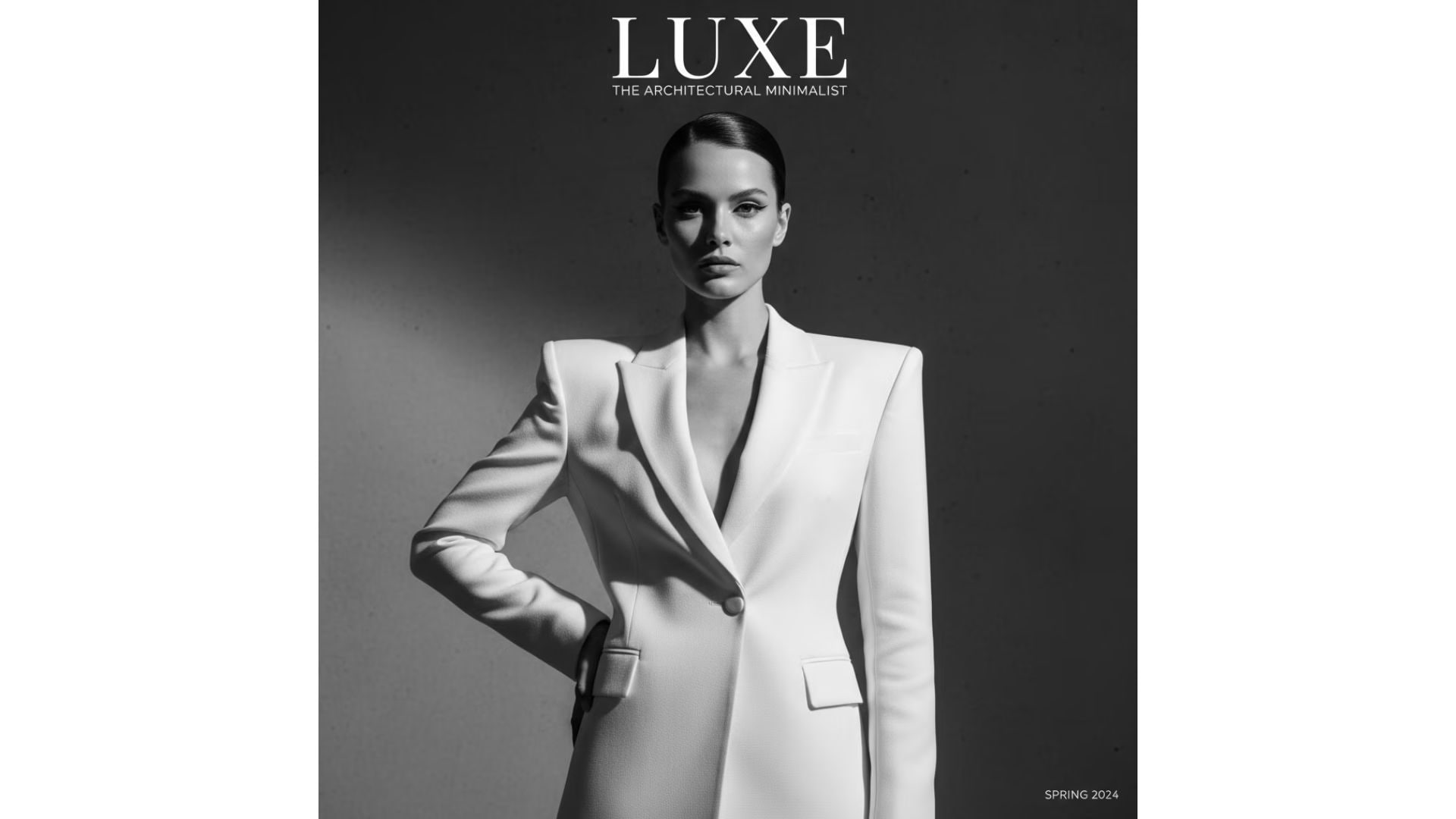

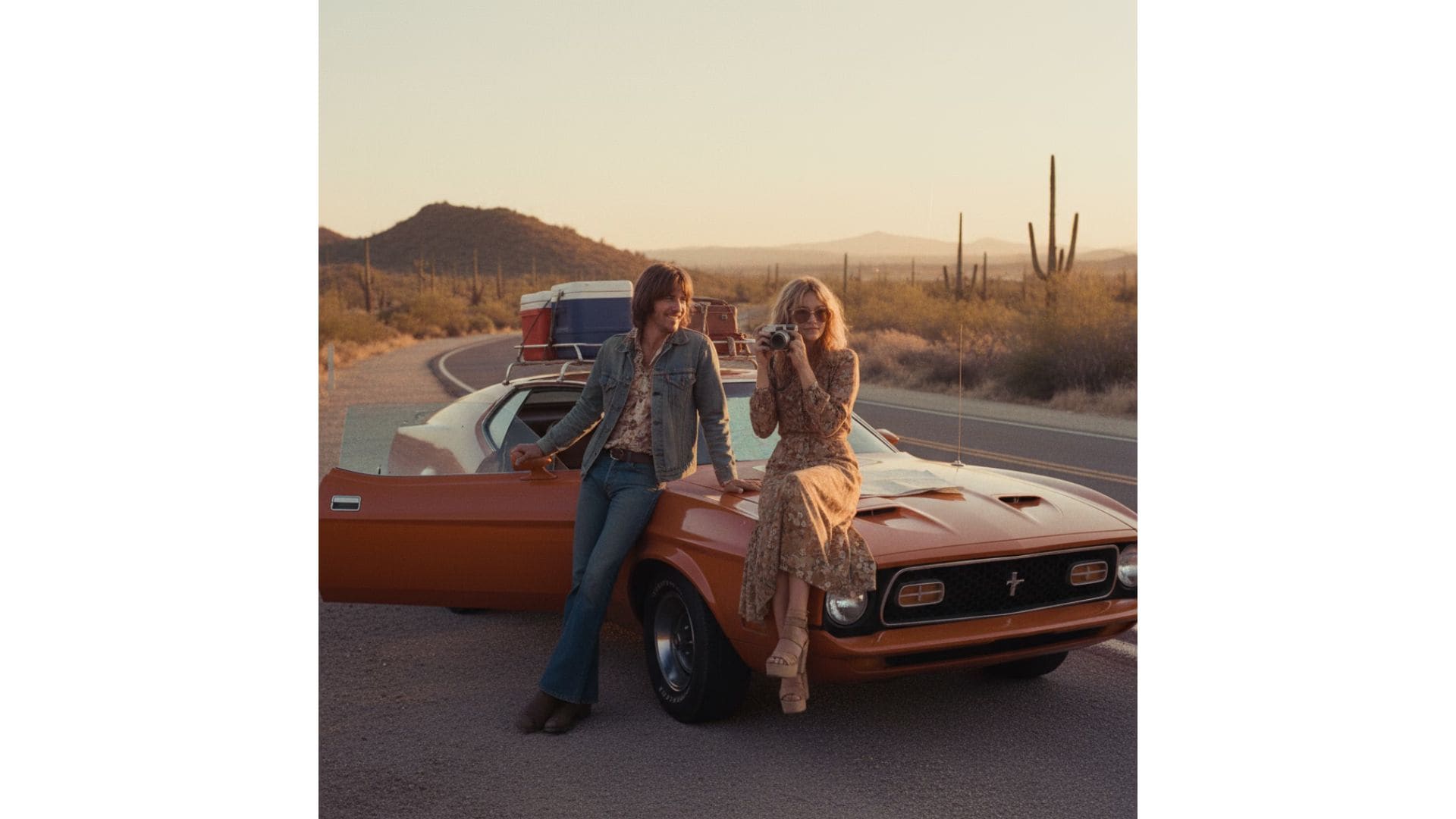

Examples of Viral Content Using Google Gemini:

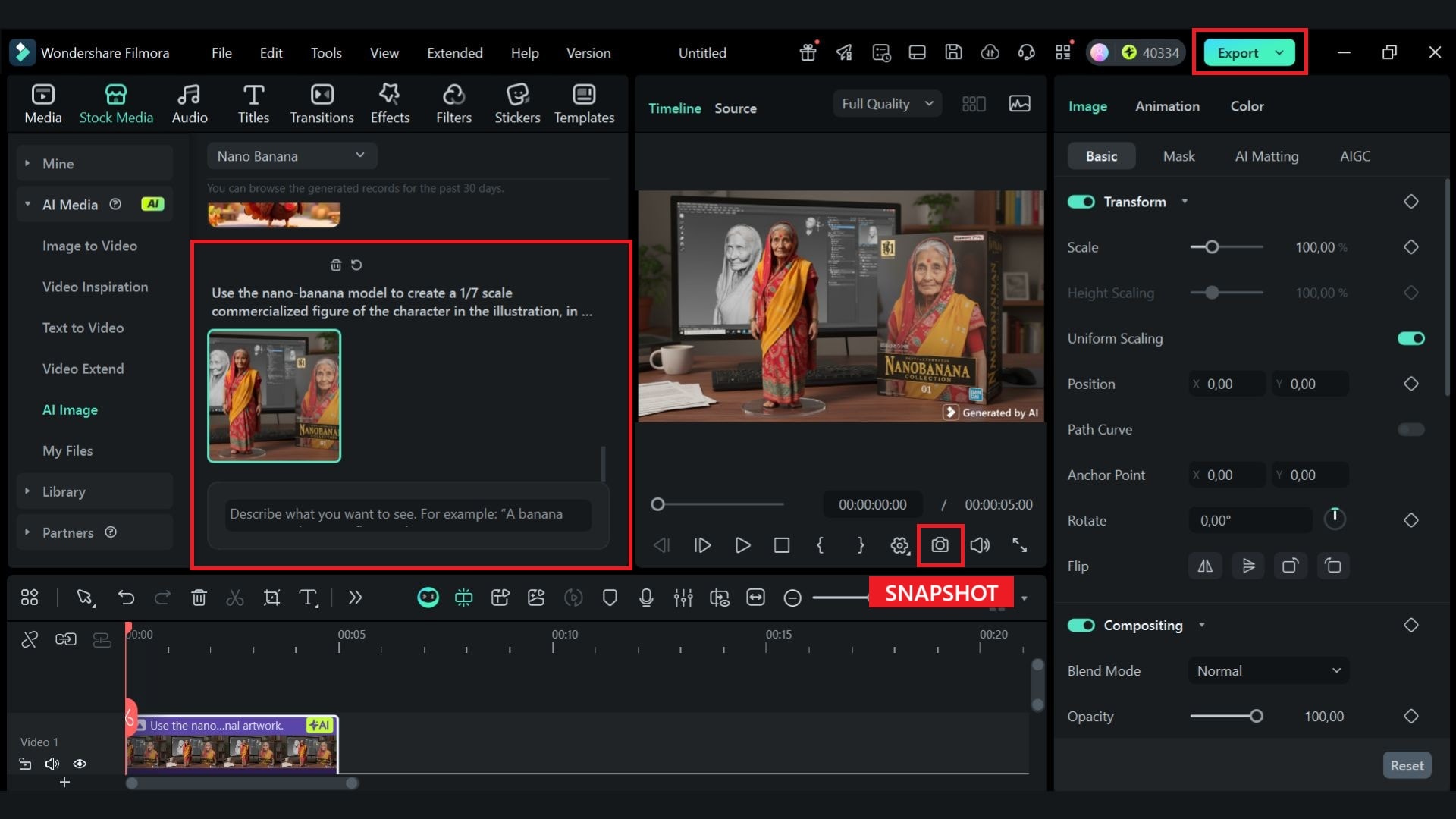

Because Gemini is widely used to generate and edit images, several "Nano Banana trends" have already taken off online. Anyone can now edit, reimagine, and transform their images in seconds, without advanced skills or heavy editing tools.

Best Prompt Techniques for Using Gemini

For a multimodal AI model like Gemini, prompts are the foundation of everything you create. If the prompt isn't clear, the results often miss the mark. A few simple techniques, though, can help you write better prompts and guide Gemini toward the output you want:

- Tip 1: Keep your phrasing natural. You don't need overly formal sentences for Gemini to understand you. Talk to it the way you normally would, and it will still follow your instructions.

- Tip 2: Keep it simple and direct. Clear instructions work best. If something could be read in more than one way, reword it so the meaning is obvious.

- Tip 3: Add helpful context and use strong, relevant keywords. The more background you give, the easier it is for Gemini to figure out what you're aiming for. Specific keywords also help Gemini pick up on important terms and guide it toward the right type of output.

- Tip 4: Split complicated tasks into smaller steps. If you need several things done, send them as separate prompts. Breaking them down helps Gemini stay focused and respond more accurately. It also makes it easier for you to refine the results as you go.

- Tip 5: Mention the art style for image generation. When you're generating an image, be specific about the style you want, such as hyper-realistic, cinematic, anime, retro, or cyberpunk. The clearer you are about the tone and look, the closer the result will be to what you imagined.
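If you send prompts from a script rather than typing them by hand, the tips above can be turned into a small template. The following is a minimal sketch, not an official Gemini prompt format: `PromptSpec`, `build_prompt`, and `split_into_prompts` are hypothetical helpers, and the "Context / Task / Keywords / Art style" labels are just one readable way to lay out a prompt.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a prompt."""
    task: str                                           # Tip 2: one clear instruction
    context: str = ""                                   # Tip 3: helpful background
    keywords: List[str] = field(default_factory=list)   # Tip 3: strong keywords
    art_style: Optional[str] = None                     # Tip 5: image prompts only


def build_prompt(spec: PromptSpec) -> str:
    """Assemble a plain-language prompt, skipping any empty parts."""
    parts = []
    if spec.context:
        parts.append(f"Context: {spec.context}")
    parts.append(f"Task: {spec.task}")
    if spec.keywords:
        parts.append("Keywords: " + ", ".join(spec.keywords))
    if spec.art_style:
        parts.append(f"Art style: {spec.art_style}")
    return "\n".join(parts)


def split_into_prompts(steps: List[str], context: str = "") -> List[str]:
    """Tip 4: turn a multi-step job into one prompt per step."""
    return [build_prompt(PromptSpec(task=step, context=context)) for step in steps]


if __name__ == "__main__":
    spec = PromptSpec(
        task="Create a poster of a city skyline at night",
        context="Poster for a retro music festival",
        keywords=["neon", "1980s"],
        art_style="cyberpunk",
    )
    print(build_prompt(spec))
```

Keeping each part in its own field makes it easy to change one element, such as the art style, and regenerate without rewriting the whole prompt.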

Limitations to Be Aware Of:

As good as the results can be, there are still areas where Gemini needs improvement.

LLMs like Gemini inherently tend to "hallucinate." They can generate content that sounds authoritative and factual but is actually incorrect, nonsensical, or completely made up.

Gemini's training data reflects the biases present in the human-generated information it consumes. It requires continuous effort to manage these biases to ensure the outputs are ethical and fair across all demographics.

Gemini lacks real-world intuition or common sense. This can limit its performance or lead to errors in tasks that require practical, lived human experience.

While the model is highly creative, its output is based on learned patterns. It may struggle to generate concepts that are truly original or entirely outside the scope of its training data.

How to Use Gemini with Filmora

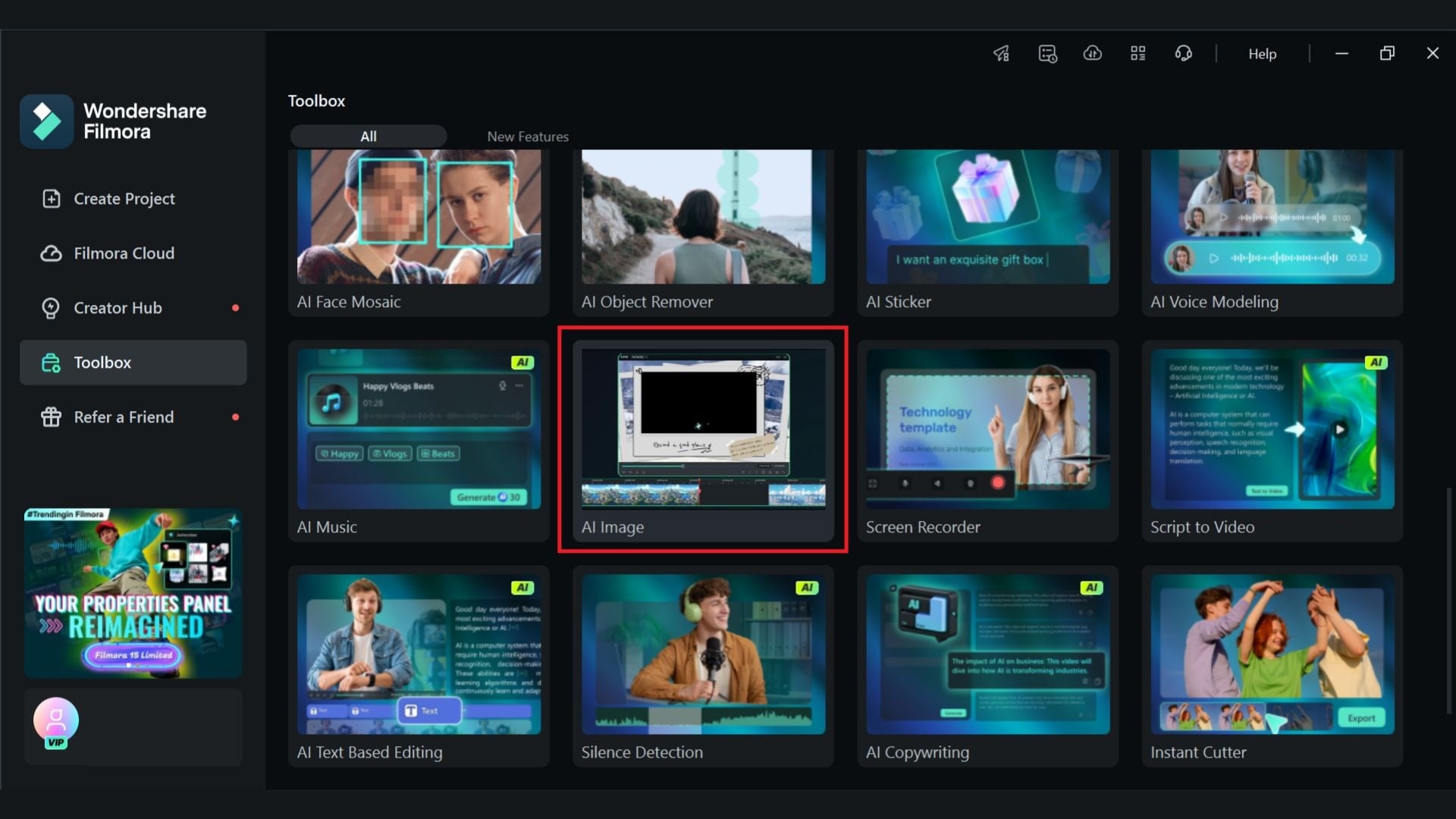

Generating images with the Nano Banana model can now be done directly in Wondershare Filmora, which makes the whole process faster and far more flexible than using it on the Gemini platform alone.

Inside Filmora, you can create your image and immediately refine it without jumping between apps. You can adjust colors, crop, add titles, apply effects, or blend it into a full video sequence in one timeline.

This setup removes the usual back-and-forth: you don't have to download an image from Gemini, reupload it into an editor, and hope the quality holds up. Filmora keeps everything in a single workflow. Once your image is generated, you can enhance it, animate it, or build an entire scene around it.

Besides generating an image with the Nano Banana model, you can also turn that image into a video with Filmora's AI Image to Video feature (powered by Veo 3, Google's video generation model).

How to Generate an Image with Nano Banana in Filmora